The Lab “Platform Governance, Media, and Technology” (PGMT) does research, teaching and knowledge transfer at the intersection of governance, digital communication and new technologies. We are particularly interested in platforms and their governance, as well as the discursive, political and technological construction of “artificial intelligence” (AI). This website showcases a selection of our activities. Please also visit our official page at our home institution, the Centre for Media, Communication and Information Research (ZeMKI), University of Bremen.

Research

The PGMT Lab does research, teaching and knowledge transfer at the intersection of governance, digital communication and new technologies.

Online Talk Series

We host a monthly online conversation series on empirical research on platform governance. Each session consists of a short input talk by a scholar, followed by ample time for discussion and exchange.

Platform Governance Archive

We host the Platform Governance Archive (PGA), a longitudinal data repository and interface on social media platforms’ policies and content moderation guidelines.

-

Mark Malone to present on Monday, 29 April, as part of the “Equality, Wellbeing and Platform Governance” Project

As part of her YUFE research project on Equality, Wellbeing and Platform Governance, fellow Dr Paloma Viejo has invited researcher and activist Mark Malone, who will give a talk on Monday 29th April at 14:00 in room SFG 1040 on the emergence of reactionary and far-right activity in Ireland, specifically on themes of anti-migrant,…

-

“Science Comics” Exhibition opens at Haus der Wissenschaft

PGMT member Dr. Dennis Redeker organizes an exhibition of science comics at the Haus der Wissenschaft in Bremen. The communication of scientific methods and findings to the general public has been breaking new ground for some years now. This now includes media formats that were previously rarely associated with scientific communication. So-called science comics initially only conveyed academic…

-

“Desinformationen und KI-generierte Fakes – rettet der Digital Services Act die Europawahl?” – Panel discussion in Brussels with lab member Prof. Dr Christian Katzenbach

Lab member Prof. Dr Christian Katzenbach will participate in a panel discussion on the role of platforms in the 2024 European elections, held in Brussels on 9 April 2024 starting at 6pm. The discussion will be held in German under the title “Desinformationen und KI-generierte Fakes – rettet der Digital Services Act die Europawahl?” (“Disinformation and AI-generated fakes: will the Digital Services Act save the European elections?”) and can be…

-

Head of Lab Christian Katzenbach now Visiting Professor at LSE

Head of PGMT Lab Christian Katzenbach has started his position as visiting professor at the London School of Economics and Political Science (LSE). During his stay, which lasts until July 2024, Christian is working on topics ranging from AI discourses and regulation to the role of social media platforms in society. At the department, he is teaching classes in…

-

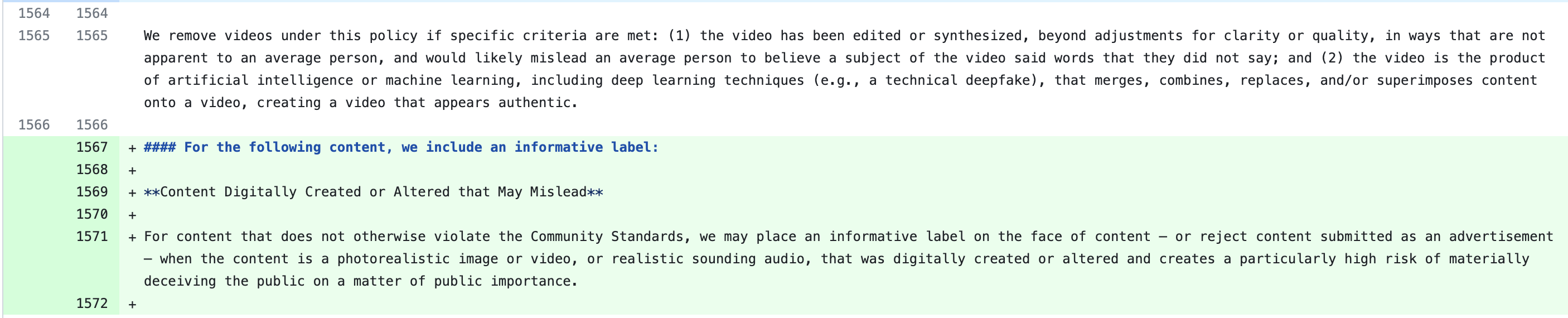

Meta to label AI-generated content on Facebook, Instagram and Threads

Meta · Community Guidelines · February 12, 2024

Meta has expanded its misinformation policies for Facebook, Instagram and Threads to cover AI-generated content. The updated Community Guidelines now include a subsection on “Content Digitally Created or Altered that May Mislead”. The company promises to create informative labels for artificially produced content that creates a particularly…

-

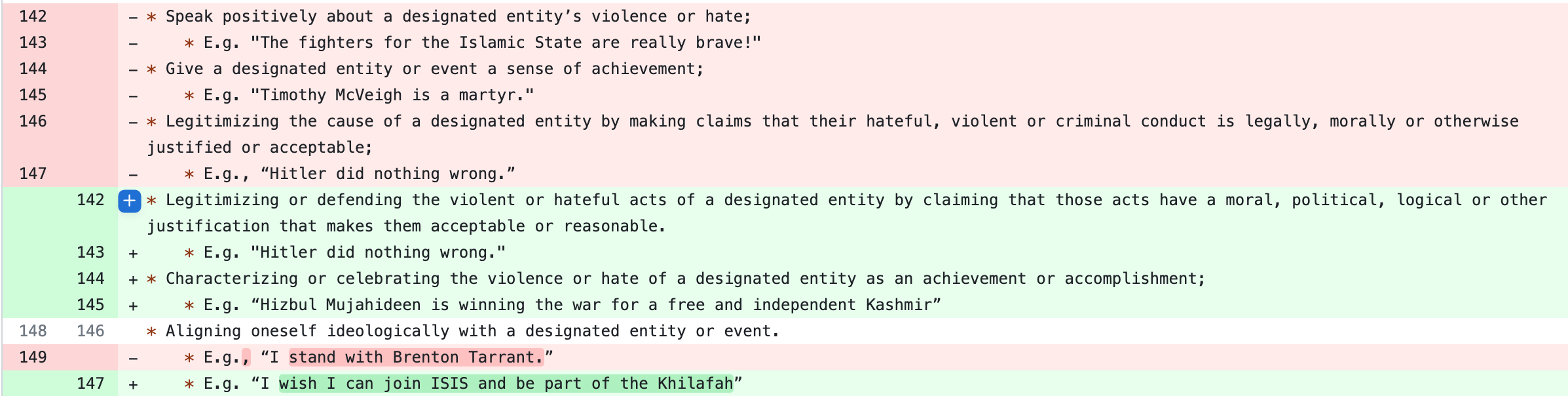

Meta redefines glorification of dangerous entities

Meta · Community Guidelines · January 11, 2024

On 11 January, Meta updated the section of its Community Guidelines that outlines the principles for the treatment of dangerous organizations and individuals. Specifically, the platform changed the description of the policy rationale as well as the definition of the glorification of dangerous entities that included…

-

End of Year Frenzy at Meta: Multiple changes to Facebook’s and Instagram’s Community Guidelines

In the final weeks of 2023, major social media platforms made a range of changes to their Community Guidelines. This spike marks the most active period of policy changes registered by the Platform Governance Archive over the past year. The following blog post gives an overview of the changes that were picked up…

-

One Day in Content Moderation: Analyzing 24 h of Social Media Platforms’ Content Decisions through the DSA Transparency Database

This report examines how social media platforms moderated user content in the EU over a single day. It analyzes whether decisions were automated or manual, which visibility measures were applied, and which content categories were most subject to moderation by specific platforms on that…