Meta · Community Guidelines · 17 October, 2023

*The following post may contain explicit language and content that could be distressing to some readers.

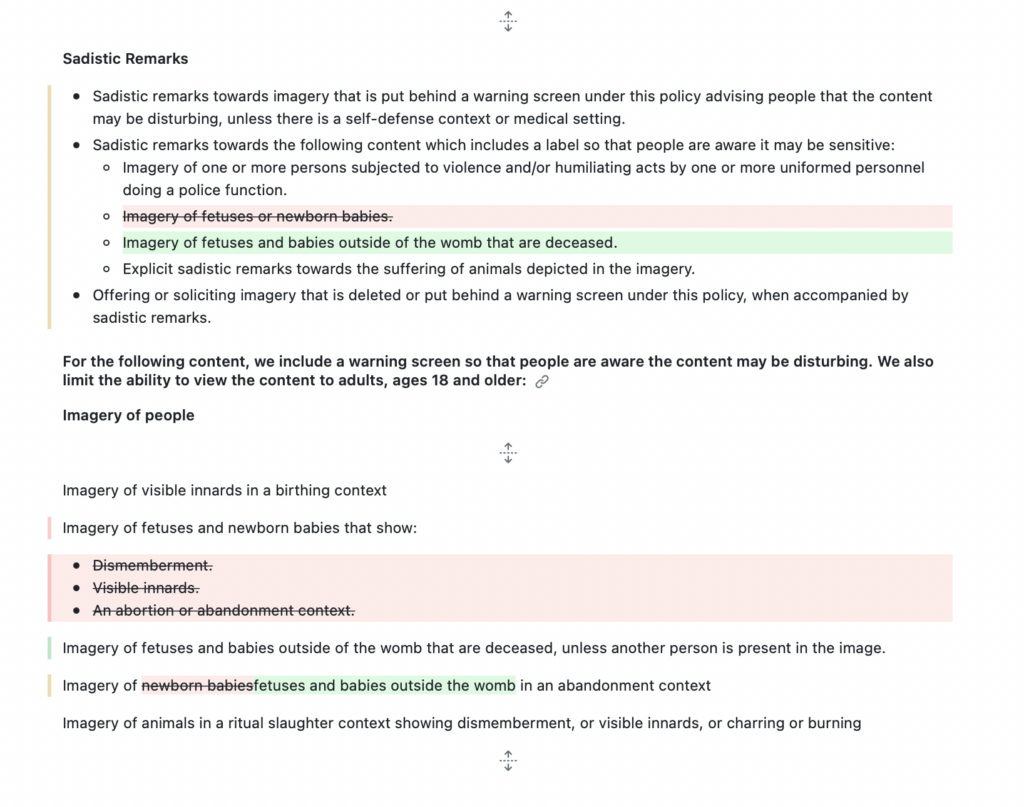

On October 17, Instagram and Facebook changed their Community Guidelines to specifically prohibit sadistic posts containing imagery of deceased babies. Previously, the corresponding policy section forbade the posting of “sadistic remarks” about “Imagery of fetuses or newborn babies”; it now explicitly addresses “Imagery of fetuses and babies outside of the womb that are deceased.”

The section on the labelling of “Violent and Graphic Content” was also changed. Meta removed a sentence stating that a sensitive content label would be put on images of fetuses and newborn babies that show “Dismemberment”, “Visible innards” or “An abortion or abandonment context”. The section now specifically addresses “Imagery of fetuses and babies outside of the womb that are deceased, unless another person is present in the image”.

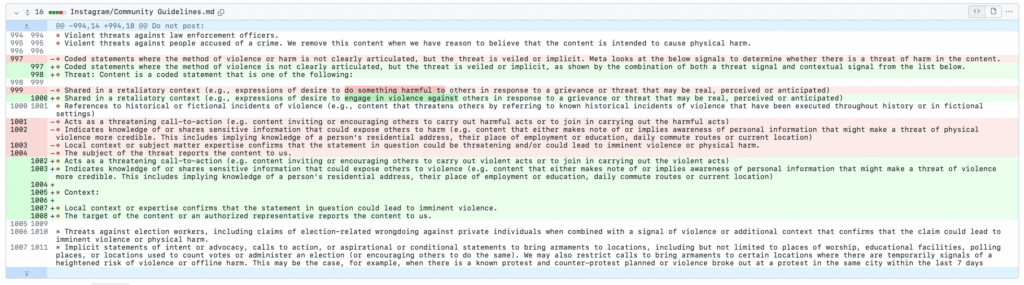

A week earlier, on October 11, the section on violence and incitement for both Facebook and Instagram had already been changed. In most cases, the word “harm” was replaced by “violence”. For example, a threat is no longer considered “a desire to do something harmful”, but “a desire to engage in violence against others”.

These changes come in the context of the current Israel-Hamas war. Meta was one of the first major social media companies to explicitly announce special measures in this context. The company reported that, in the three days following October 7, it removed or marked as disturbing more than 795,000 pieces of content in Hebrew and Arabic that violated its rules. “Our policies are designed to keep people safe on our apps while giving everyone a voice”, says the company. However, creators in Gaza, the epicentre of the war, report shadow-banning and accuse Meta of restricting their content.

This raises the question of platforms’ role in shaping online discussions in times of crisis. It is probably also a critical moment for the EU Digital Services Act (DSA), which aims to keep social media platforms safe online environments. For instance, X (formerly Twitter) is currently under a DSA compliance investigation over the spread of violent content and hate speech in the context of the Israeli–Palestinian conflict.